The Impact of Bursty Packet Loss on Audio Quality in WebRTC

Delivering high-quality audio in WebRTC is hardest under poor network conditions, and the main culprit is bursty packet loss, where several packets are lost in a row rather than one at a time. Bursts are common in congested networks, areas with weak mobile coverage, and public Wi-Fi.

WebRTC offers several strategies to mitigate packet loss, but how well each one works depends on the network. The most common techniques are:

- Opus Forward Error Correction (FEC): Each audio packet carries a low-bitrate copy of the preceding packet, so the receiver can recover when a single packet is lost.

- Packet Retransmissions: Leveraging standard NACK/RTX mechanisms, the receiver requests retransmission upon detecting packet sequence gaps.

- Packet Duplication: The sender transmits multiple copies of each packet, in effect sending preemptive retransmissions in case the original is lost.

- Redundancy (RED): Each packet carries the encoded audio for the current packet plus copies of previous packets, typically in the RFC 2198 format.

- Packet Loss Concealment (PLC): The receiver masks lost packets with techniques such as temporal audio stretching or neural network approximations.

- Deep Redundancy (DRED): A neural network encoder provides lower-bitrate copies of previous packets alongside the current packet, which is encoded at high quality as usual.

However, the effectiveness of these techniques depends on network latency and on how many packets are lost in a row. For instance, retransmissions suffer under high latency, while Opus FEC and short RED patterns cannot recover long runs of consecutive losses. Knowing the network's characteristics is therefore essential to choosing the most suitable approach.
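
To make the burst-length limit concrete, here is a minimal Python sketch. It is not how WebRTC implements RED. It assumes an idealized scheme in which each packet carries copies of the previous `redundancy` packets, so a lost packet can be recovered if any of the next `redundancy` packets arrives. The function name and the loss trace are made up for illustration.

```python
def recovered_fraction(lost, redundancy):
    """Fraction of lost packets recovered by an idealized RED scheme.

    lost: list of booleans, True where the packet at that index was lost.
    redundancy: number of previous packets copied into each packet
                (1 roughly corresponds to Opus FEC or single-packet RED).
    """
    total_lost = sum(lost)
    if total_lost == 0:
        return 1.0
    recovered = 0
    for i, is_lost in enumerate(lost):
        if not is_lost:
            continue
        # Copies of packet i travel in packets i+1 .. i+redundancy.
        carriers = lost[i + 1 : i + 1 + redundancy]
        if any(not carrier_lost for carrier_lost in carriers):
            recovered += 1
    return recovered / total_lost


# Hypothetical trace: one isolated loss and one burst of three.
trace = [False, True, False, False, True, True, True, False, False]
print(recovered_fraction(trace, 1))  # 0.5
print(recovered_fraction(trace, 3))  # 1.0
```

With a redundancy of one, only the isolated loss and the last packet of the burst come back. Covering the whole burst takes a redundancy of three, which also means the receiver may have to wait up to three packet intervals before it can play the recovered audio.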

Analyzing Packet Loss Bursts in Real Networks

Exploring consecutive packet losses provides valuable insights into network behavior. How prevalent are these bursts in actual networks, and what magnitude do they typically reach?

These questions used to be hard to answer. The new network simulator developed for the Opus 1.5 release, which is based on data from Microsoft, now gives us a simple tool for estimating these parameters.

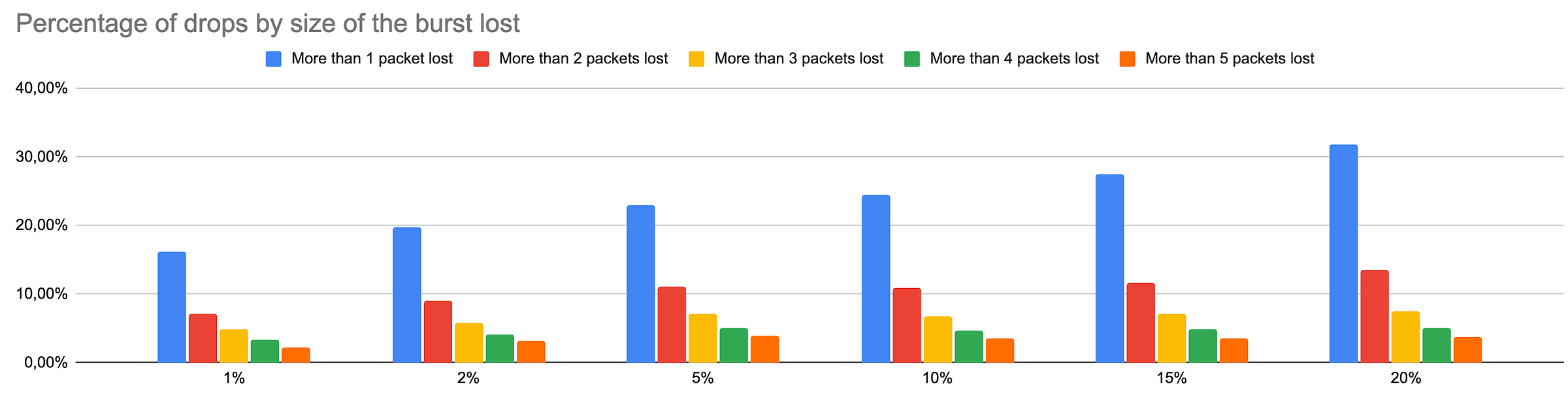

I used that simulator to run some tests with different packet loss conditions and extract some statistics. The graph below illustrates the extent of loss bursts relative to the average packet loss percentage in a network.

For instance, in a network with 1% packet loss, approximately 16% of losses involve more than one consecutive packet. Techniques such as Opus FEC or single-packet redundancy are therefore less effective in those cases. Similarly, in networks with 20% packet loss, approximately 5% of losses involve five or more consecutive packets, so we would need to add a lot of latency to take advantage of redundancy.
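
Statistics of this kind can be computed from any loss trace. The Python sketch below is one possible way to do it, under the assumption that percentages are counted per loss event (a run of consecutive lost packets); the figures above may have been computed differently. The function names and the trace are illustrative and are not taken from the Opus simulator.

```python
def burst_lengths(lost):
    """Return the length of every run of consecutive lost packets."""
    runs = []
    current = 0
    for is_lost in lost:
        if is_lost:
            current += 1
        elif current:
            runs.append(current)
            current = 0
    if current:
        # The trace ended in the middle of a burst.
        runs.append(current)
    return runs


def share_of_bursts_at_least(lost, min_len):
    """Fraction of loss events that span min_len or more packets."""
    runs = burst_lengths(lost)
    if not runs:
        return 0.0
    return sum(1 for run in runs if run >= min_len) / len(runs)


# Hypothetical trace, True = lost. Real traces would come from a simulator or logs.
trace = [False, True, False, True, True, False,
         False, True, False, True, True, True]
print(burst_lengths(trace))                # [1, 2, 1, 3]
print(share_of_bursts_at_least(trace, 2))  # 0.5
```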

Since the effectiveness of each technique depends on the loss pattern, the next question is how to tell whether packet loss is bursty. Traditional RTCP feedback reports the average loss percentage but says nothing about loss patterns. There are several ways to fill that gap:

- Assuming Bursty Packet Loss: This common approach assumes that the length of packet loss gaps correlates with the overall packet loss rate.

- Transport-CC Feedback: Although less common, this method measures burstiness from transport-wide congestion control (transport-cc) feedback, which reports arrival information for each packet and is therefore a theoretically sound basis.

- RTCP Extended Reports (XR): XR reports can explicitly carry information about packet loss bursts.
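
As a rough illustration of the feedback-based approaches, per-packet arrival information can be turned into a loss trace and summarized as a mean burst length. The Python sketch below assumes the sender already has the list of 16-bit sequence numbers reported as received for a window. It does not parse real transport-cc or XR packets, and the `looks_bursty` threshold is a hypothetical value chosen for the example.

```python
def loss_trace(first_seq, received_seqs, count):
    """Build a loss trace for `count` packets starting at `first_seq`.

    received_seqs: 16-bit sequence numbers reported as received.
    Returns a list of booleans, True where a packet was not reported.
    """
    received = set(received_seqs)
    # Mask with 0xFFFF so the window can wrap around the sequence space.
    return [((first_seq + i) & 0xFFFF) not in received for i in range(count)]


def mean_burst_length(lost):
    """Average packets per run of consecutive losses (0.0 if none)."""
    runs = []
    current = 0
    for is_lost in lost + [False]:  # trailing sentinel closes the last run
        if is_lost:
            current += 1
        elif current:
            runs.append(current)
            current = 0
    return sum(runs) / len(runs) if runs else 0.0


def looks_bursty(lost, threshold=1.5):
    """Hypothetical heuristic: bursty if the mean run exceeds the threshold."""
    return mean_burst_length(lost) > threshold


# Hypothetical feedback window that wraps around the 16-bit sequence space.
received = [65533, 65534, 2, 3, 4]
trace = loss_trace(65533, received, 8)
print(trace)  # [False, False, True, True, True, False, False, False]
print(mean_burst_length(trace))  # 3.0
print(looks_bursty(trace))       # True
```

A sender could feed a signal like this into its choice of redundancy depth, although the right window size and threshold would need tuning against real traffic.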

Understanding the burstiness of packet loss is crucial for devising effective strategies to mitigate its impact on audio quality in WebRTC.

The decision regarding which techniques to implement is nuanced and far from black and white. Various combinations can be effective, but in my view, a blend of DRED, retransmissions, and PLC strikes a balance between maintaining quality, managing latency, and optimizing bitrate utilization across diverse network conditions. I’m eager to hear about others’ experiences and insights on this matter.
