WebRTC Architecture that keeps working when us-east-1 goes down

This week AWS had an outage in its Northern Virginia region, probably the most widely used AWS region, and many of the world's most popular services were affected.

It was at least the third time in five years that AWS’s northern Virginia cluster, known as US-EAST-1, contributed to a major internet meltdown.

Every service is different, and nowadays, with so many dependencies and so much coupling, it is hard not to be affected by an incident like this. However, for WebRTC platforms specifically, it should be relatively straightforward to keep working when one AWS region goes down.

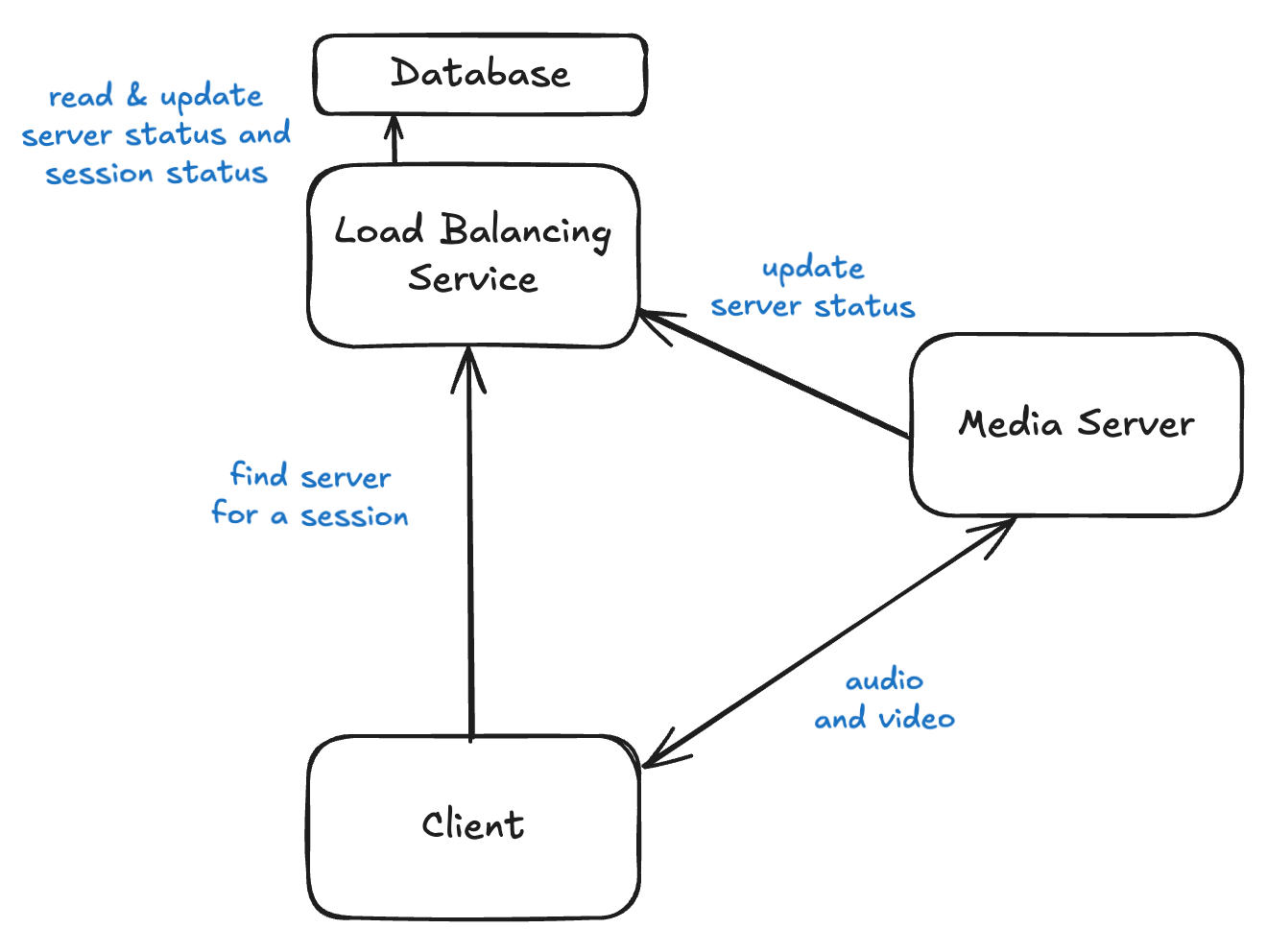

Basic WebRTC Architecture

The basic architecture of most WebRTC platforms comprises four components:

- Load balancing service: The client application’s initial point of contact, selecting the appropriate media server for a session.

- Database: Stores server information (status, load, location) and session-to-server mappings, often using a fast database like Redis.

- Signaling service: Manages session state and forwards control messages. For this discussion, it’s assumed to be part of the media server and ignored in the following diagrams.

- Media forwarding service: The core component, routing audio and video among participants. It’s typically stateless and periodically reports its status to the Database.

A complete solution usually includes other components, for things like recording or firewall traversal (for example TURN servers), but they are ignored here: they are usually stateless, which makes them easy to keep robust during a network issue.
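A minimal sketch of how media servers might report their status, assuming the Database behaves like a key-value store with expiring entries. An in-memory dict stands in for Redis so the example runs on its own, and all names (`ServerRegistry`, `heartbeat`, `ttl_s`) are hypothetical. A server that stops reporting, for example because its region is down, simply ages out of the list the Load Balancing service sees.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ServerStatus:
    server_id: str
    region: str       # e.g. "us-east-1"
    load: float       # hypothetical metric: 0.0 (idle) .. 1.0 (full)
    last_seen: float  # clock value at the last heartbeat


class ServerRegistry:
    """In-memory stand-in for the Database (hypothetical names).

    With Redis, the same effect is usually obtained by writing each server's
    status under a key that expires after a TTL.
    """

    def __init__(self, ttl_s: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_s = ttl_s
        self.clock = clock
        self._servers: Dict[str, ServerStatus] = {}

    def heartbeat(self, server_id: str, region: str, load: float) -> None:
        # Each media server calls this periodically, e.g. every few seconds.
        self._servers[server_id] = ServerStatus(server_id, region, load, self.clock())

    def alive(self) -> List[ServerStatus]:
        # A server whose last heartbeat is older than the TTL is treated as down.
        now = self.clock()
        return [s for s in self._servers.values() if now - s.last_seen <= self.ttl_s]


# Usage with a fake clock: ms-1 stops reporting, as if its region went down.
fake_now = [0.0]
reg = ServerRegistry(ttl_s=10.0, clock=lambda: fake_now[0])
reg.heartbeat("ms-1", "us-east-1", 0.2)
reg.heartbeat("ms-2", "eu-west-1", 0.5)
fake_now[0] = 8.0
reg.heartbeat("ms-2", "eu-west-1", 0.4)
fake_now[0] = 15.0
print([s.server_id for s in reg.alive()])  # ['ms-2']
```

The injectable clock is only there to make the expiry behaviour easy to demonstrate; in production the TTL would be a few heartbeat intervals so that a single lost report does not mark a server as down.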

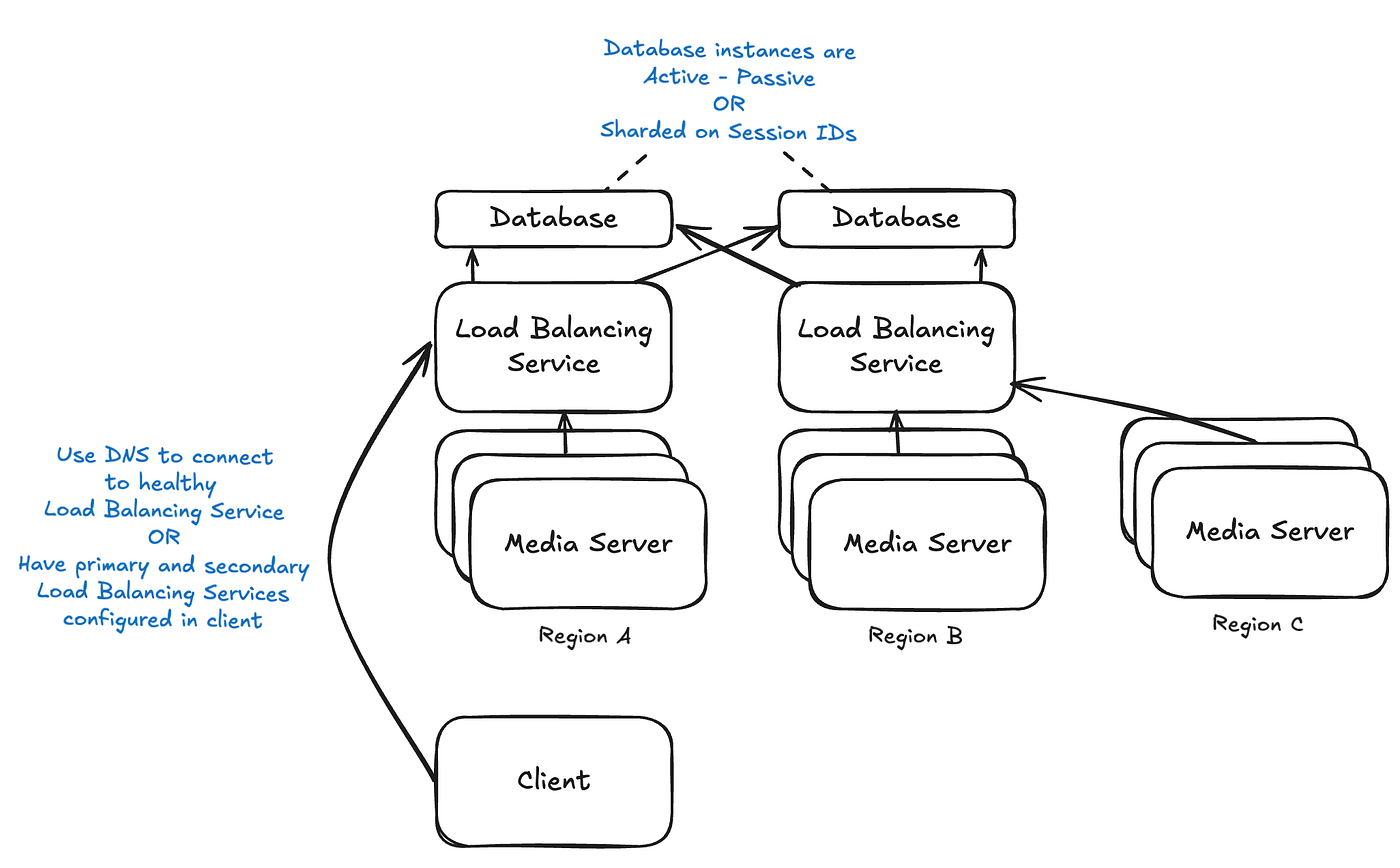

Distributed WebRTC Architecture

When you want to provide the best possible quality, you deploy media servers in multiple regions so that each participant can connect to a nearby server with lower latency, and your architecture typically looks like this:

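This is the most common deployment in my experience, but it has an obvious single point of failure: the Database, or the Load Balancing service plus the Database, depending on how you deploy them. If their region goes down, new sessions can no longer be assigned to media servers, even to those running in healthy regions.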
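To make the role of the Load Balancing service concrete, here is a minimal sketch of how it might use the server status and session-to-server mappings kept in the Database once media servers live in several regions. The selection policy is an assumption for illustration (reuse the session's server if it is still alive, otherwise prefer the least-loaded server in the client's region, otherwise any live server), and every name is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class MediaServer:
    server_id: str
    region: str
    load: float  # hypothetical metric: 0.0 (idle) .. 1.0 (full)


def assign_server(
    session_id: str,
    client_region: str,
    live_servers: List[MediaServer],
    session_map: Dict[str, str],
) -> Optional[str]:
    """Return the media server for a session (hypothetical selection policy)."""
    live_ids = {s.server_id for s in live_servers}
    # Participants of an existing session must land on the same media server,
    # as long as that server is still alive; otherwise the session is reassigned.
    existing = session_map.get(session_id)
    if existing in live_ids:
        return existing
    # Prefer servers with spare capacity in the client's region...
    candidates = [s for s in live_servers if s.region == client_region and s.load < 1.0]
    # ...and fall back to any live server with spare capacity.
    if not candidates:
        candidates = [s for s in live_servers if s.load < 1.0]
    if not candidates:
        return None  # no capacity anywhere
    chosen = min(candidates, key=lambda s: s.load)
    session_map[session_id] = chosen.server_id  # stored in the Database in practice
    return chosen.server_id


servers = [
    MediaServer("ms-use1-a", "us-east-1", 0.7),
    MediaServer("ms-use1-b", "us-east-1", 0.3),
    MediaServer("ms-euw1-a", "eu-west-1", 0.1),
]
sessions: Dict[str, str] = {}
print(assign_server("room-42", "us-east-1", servers, sessions))  # ms-use1-b
print(assign_server("room-42", "eu-west-1", servers, sessions))  # ms-use1-b (same session)
print(assign_server("room-7", "ap-south-1", servers, sessions))  # ms-euw1-a (no local server)
```

Note that both `live_servers` and `session_map` come from the Database, which is exactly why a Database outage stops new sessions everywhere in this deployment.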

Robust distributed architecture

If the Load Balancing service and the Database are the problem when the region hosting them has an outage, the solution looks obvious: let’s just duplicate them.

Replicating the Load Balancing service is easy: deploy it to as many regions as needed (ideally all of them) and use one of these approaches to fail over to a different Load Balancer if your closest region has an outage:

- Rely on health checks and short DNS TTLs. That way, if there is an outage, the DNS service will mark the affected region as unavailable and route client requests to a different one. This can be a problem if the outage affects the DNS service itself (like Route 53).

- Configure clients to fall back to a different URL (for example, api-backup.domain.com when api.domain.com does not respond).
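The client-side fallback can be sketched as follows. The sketch assumes a hypothetical `fetch` callable that performs the HTTP request to the Load Balancing service and raises on errors or timeouts; injecting it keeps the example self-contained. The URLs are the placeholder ones from the text.

```python
from typing import Callable, List, Sequence

# Placeholder endpoints from the text; the order expresses preference.
LB_URLS = ("https://api.domain.com", "https://api-backup.domain.com")


class AllEndpointsFailed(Exception):
    pass


def request_with_fallback(
    path: str,
    fetch: Callable[[str], dict],  # hypothetical: raises on error or timeout
    base_urls: Sequence[str] = LB_URLS,
) -> dict:
    """Try each Load Balancer URL in order and return the first successful response."""
    errors: List[str] = []
    for base in base_urls:
        try:
            return fetch(base + path)
        except Exception as exc:  # e.g. connection errors or timeouts raised by fetch
            errors.append(f"{base}: {exc}")
    raise AllEndpointsFailed("; ".join(errors))


def fake_fetch(url: str) -> dict:
    # Simulate the primary endpoint being unreachable.
    if url.startswith("https://api.domain.com"):
        raise ConnectionError("primary unreachable")
    return {"media_server": "ms-euw1-a", "via": url}


print(request_with_fallback("/sessions/room-42", fake_fetch))
# {'media_server': 'ms-euw1-a', 'via': 'https://api-backup.domain.com/sessions/room-42'}
```

A real `fetch` should use a short per-attempt timeout (for example `urllib.request.urlopen(url, timeout=2)` in Python) so that a hanging primary does not delay the fallback for too long.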

Replicating the database is a little harder. These are the most common approaches:

- The easiest is active/passive: multiple instances of the database are available, but only one is used at a given time while the others just replicate its data. In case of an outage, queries are moved to one of those replicas, either manually or automatically by a monitoring process.

- A more advanced approach is active/active with sharding, where each instance owns part of the data. It is more complex, but it has the additional advantage of distributing the load.

- You can use an already distributed database like Google Cloud Spanner. This can be tricky if that distributed database depends on some central component, as apparently happened with DynamoDB during this latest AWS outage.
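The first two approaches can be sketched together. The active/passive part assumes a monitoring process that periodically probes the current primary and promotes the first healthy replica when the probe fails; the active/active part assumes each session is owned by one regional shard chosen by hashing its id. The `is_healthy` probe and the instance names are hypothetical, and the sketch ignores replication lag and split-brain (two instances both believing they are primary), which a real deployment must handle.

```python
import hashlib
from typing import Callable, List


class ActivePassiveDatabase:
    """Tracks which instance receives queries (hypothetical monitor logic)."""

    def __init__(self, instances: List[str], is_healthy: Callable[[str], bool]) -> None:
        self.instances = list(instances)  # instances[0] starts as the primary
        self.is_healthy = is_healthy
        self.primary = self.instances[0]

    def check_and_failover(self) -> str:
        # Called periodically by the monitoring process.
        if self.is_healthy(self.primary):
            return self.primary
        for candidate in self.instances:
            if candidate != self.primary and self.is_healthy(candidate):
                self.primary = candidate  # promote the replica
                return self.primary
        raise RuntimeError("no healthy database instance")


def shard_for(session_id: str, shards: List[str]) -> str:
    """Active/active with sharding: each session is owned by exactly one shard."""
    # A stable hash (not Python's per-process randomized hash()) so every region agrees.
    digest = hashlib.sha256(session_id.encode()).digest()
    return shards[int.from_bytes(digest[:8], "big") % len(shards)]


# Simulate an outage of the primary in us-east-1.
down = {"db-us-east-1"}
db = ActivePassiveDatabase(
    ["db-us-east-1", "db-us-west-2", "db-eu-west-1"],
    is_healthy=lambda name: name not in down,
)
print(db.check_and_failover())  # db-us-west-2

shards = ["db-us-east-1", "db-eu-west-1", "db-ap-south-1"]
owner = shard_for("room-42", shards)  # same answer in every region
```

Plain hash sharding only spreads the load: to survive a region outage, each shard still needs its own replica (for example, active/passive per shard), and changing the number of shards remaps most sessions, which is why consistent hashing is often used instead.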

This is what the final architecture looks like:

With the solutions proposed above, your WebRTC infrastructure should be resilient to any problem affecting a single region of your cloud provider.

It is possible to go further, for example by deploying on multiple providers (AWS, GCP, Azure…) or on your own infrastructure, but I wouldn't recommend that unless it is a really critical piece of software or you need it for other reasons, such as cost or compliance.
