Screensharing content detection

One interesting feature in WebRTC is the ability to set a content hint on a media track so that WebRTC can optimize the transmission for that specific type of content. If the content hint is "text", it will try to optimize for readability; if the content hint is "motion", it will try to optimize for fluidity, even if that means reducing the resolution or the definition of the video.
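As a minimal sketch of how a hint could be applied once you know what kind of content is being shared: `applyContentHint` and its `contentIsStatic` flag are hypothetical names used only for illustration, while `contentHint` is the track attribute defined by the W3C content hints spec.

```javascript
// Hypothetical helper: picks a content hint for a screen-share video track.
// "text" and "motion" are the two video hints discussed above ("detail" also exists).
function applyContentHint(track, contentIsStatic) {
  track.contentHint = contentIsStatic ? "text" : "motion";
  return track.contentHint;
}

// In a browser the track would typically come from getDisplayMedia():
//   const stream = await navigator.mediaDevices.getDisplayMedia({ video: true });
//   applyContentHint(stream.getVideoTracks()[0], true);
```

The rest of this post is about how to guess that `contentIsStatic` value automatically.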

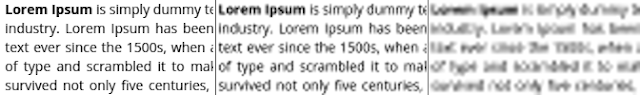

This is specially useful when sharing documents or slides where the "crispiness" of the text is very important for the user experience. You can see the impact of those hints in the video encoding in this screenshot taken from the W3C spec:

Simplest approach

The idea is that when you are sending static content (slides), WebRTC notices that the frame didn't change from the previous one and skips sending a new one, so it ends up sending only about 1 frame per second.

With this approach, just checking the framerate sent using the getStats() API could give us a high level of confidence that the content is static. For example, we could use a threshold of 2-3 fps to differentiate static and dynamic content.
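A rough sketch of that check, assuming two snapshots of the "outbound-rtp" video stats taken a few seconds apart. The function names and the exact threshold constant are illustrative choices, not something taken from the jsfiddle; `framesSent` and `timestamp` are standard stats fields.

```javascript
// Hypothetical threshold, picked from the 2-3 fps range mentioned above.
const STATIC_FPS_THRESHOLD = 3;

// prev and curr are two "outbound-rtp" video stats entries for the same stream.
function sentFramerate(prev, curr) {
  const elapsedSeconds = (curr.timestamp - prev.timestamp) / 1000; // timestamps are in ms
  if (!(elapsedSeconds > 0)) return 0;
  return (curr.framesSent - prev.framesSent) / elapsedSeconds;
}

function looksStatic(prev, curr, threshold = STATIC_FPS_THRESHOLD) {
  return sentFramerate(prev, curr) < threshold;
}

// Browser-only: returns the first outbound video entry of an RTCStatsReport.
// With simulcast there can be several entries; this sketch ignores that.
async function getOutboundVideoStats(pc) {
  const report = await pc.getStats();
  for (const stats of report.values()) {
    if (stats.type === "outbound-rtp" && stats.kind === "video") return stats;
  }
  return null;
}
```

Calling `getOutboundVideoStats(pc)` on a timer and feeding consecutive results to `looksStatic` would give one raw guess per interval.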

Special cases:

- You are sending a low framerate because the available bandwidth is too low and not because the content is static. I don't think libwebrtc will send such a low framerate in that case, but anyway we can differentiate this case by checking the "availableOutgoingBitrate" and/or "qualityLimitationReason" in the WebRTC stats.

- You move the cursor over the slides, your slides have a small animated logo in one corner, or you are sharing a text editor with a small blinking cursor. In those cases this approach won't work because the framerate sent will be high.
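The first special case could be filtered out with something like the sketch below. The function name and the bitrate floor are assumptions made for illustration; `qualityLimitationReason` is reported in the "outbound-rtp" stats and `availableOutgoingBitrate` in the "candidate-pair" stats.

```javascript
// Hypothetical floor below which a low framerate is blamed on the network.
const MIN_USABLE_BITRATE_BPS = 300_000;

// outboundRtp: an "outbound-rtp" stats entry; candidatePair: the active
// "candidate-pair" entry (may be missing, in which case it is ignored).
function lowFramerateIsBandwidthLimited(outboundRtp, candidatePair) {
  if (outboundRtp.qualityLimitationReason === "bandwidth") return true;
  const available = candidatePair && candidatePair.availableOutgoingBitrate;
  return typeof available === "number" && available < MIN_USABLE_BITRATE_BPS;
}
```

When this returns true, a low framerate should not be read as evidence of static content.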

Elaborated approach

The idea is that when you are sending static images you can send a higher quality with fewer bits, because the image doesn't change from one frame to the next, so each pixel needs fewer bits to be encoded.

We can use the framerate, qp (quantization parameter), and resolution statistics from WebRTC and calculate a number that intuitively shows Quality / BitsUsedPerPixel.

You can see the implementation in this jsfiddle: https://jsfiddle.net/zb8w4nd5/3/
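For readers who just want the shape of the calculation, here is one possible way to compute such a number. This is an assumed formula and not necessarily the one used in the jsfiddle: quality is modeled as 1 / average QP, and the bits per pixel come from `bytesSent`, `framesEncoded`, `frameWidth` and `frameHeight` in the "outbound-rtp" stats. `qpSum` is not reported for every codec, in which case the sketch returns null.

```javascript
// prev and curr are two "outbound-rtp" video stats entries for the same stream.
function qualityFactor(prev, curr) {
  const frames = curr.framesEncoded - prev.framesEncoded;
  const bits = (curr.bytesSent - prev.bytesSent) * 8;
  const pixelsPerFrame = curr.frameWidth * curr.frameHeight;
  if (!(frames > 0) || !(bits > 0) || !(pixelsPerFrame > 0)) return null; // nothing to measure

  // Average QP over the interval; lower QP means less quantization.
  const averageQp = (curr.qpSum - prev.qpSum) / frames;
  if (!(averageQp > 0)) return null; // qpSum missing or unchanged

  const bitsPerPixel = bits / (frames * pixelsPerFrame);
  const quality = 1 / averageQp; // assumption: quality modeled as the inverse of QP
  return quality / bitsPerPixel; // higher value = more quality per bit spent
}
```

With this definition static content should produce a high value (low QP, few bits per pixel) and video a low one; where to put the threshold would have to be found experimentally.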

I tested both approaches using a document with two slides: a static one with text and an image, and one with an embedded YouTube video. The results are shown in the next graph.

One thing we can see in the graph is that the framerate is a good proxy apart from when there is some "artificial" movement like the mouse cursor. In that sense, the Quality factor looks like an approach with fewer issues.

As you can see in the graph, after switching from the video slide back to the text slide the Quality factor took a while to recover. Combining both approaches and using the framerate in that case could make the detection faster.

Additional considerations:

- It is probably a good idea to ignore the first few seconds, as the stats can take a bit to stabilize.

- Add some hysteresis or a windowed average to avoid false detections when switching slides or scrolling in a text document.

- The Quality factor assumes everything is linear, and other approaches (logarithmic, quadratic...) might give more accurate results.

- I haven't used this in a real product yet, so take it just as a list of ideas to explore and not a finished reliable algorithm.
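The first two considerations could be sketched as a small wrapper around whichever per-sample guess is used. The class name, option names, and default values below are made up for illustration, and the debounce shown is only one simple form of hysteresis.

```javascript
// Hypothetical debouncer: ignores the first samples and only changes state
// after the same raw guess has been seen several times in a row.
class StaticContentDetector {
  constructor({ warmupSamples = 5, requiredStreak = 3 } = {}) {
    this.warmupSamples = warmupSamples;
    this.requiredStreak = requiredStreak;
    this.seen = 0;
    this.state = null; // null until the first decision is made
    this.candidate = null;
    this.streak = 0;
  }

  // rawIsStatic is the per-sample guess; returns the debounced state.
  update(rawIsStatic) {
    this.seen += 1;
    if (this.seen <= this.warmupSamples) return this.state; // still warming up
    if (rawIsStatic === this.candidate) {
      this.streak += 1;
    } else {
      this.candidate = rawIsStatic;
      this.streak = 1;
    }
    if (this.streak >= this.requiredStreak) this.state = this.candidate;
    return this.state;
  }
}
```

A single odd sample, such as the one produced while switching slides, then leaves the reported state unchanged.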

Noteworthy: https://github.com/w3c/mediacapture-handle/issues/35
