Different types of latency measurements in WebRTC

When building WebRTC services, one of the most important metrics for measuring the user experience is the latency of the communication. Latency matters because it affects conversational interactivity. It also affects video quality when retransmissions are used (the most common case), because the effectiveness of retransmissions depends on how quickly they arrive.

And, to be fair, at the end of the day latency is what differentiates Real Time Communications from other types of communication and protocols, like the ones used for streaming, which are less sensitive to delay. So latency is clearly an important metric to track.

However, there is no single measurement of latency, and different platforms, APIs and people usually measure different types of latency. From what I've seen in the past, the differences fall along the four axes described below.

One Hop latency vs End to End latency

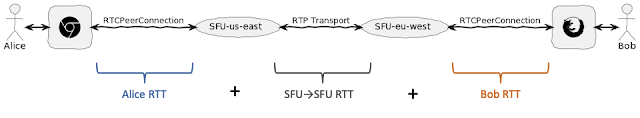

When there are multiple servers involved in a conversation, the native WebRTC stats only provide information about the latency of the first hop (from the client to the server). That doesn't give you the full picture. Usually what you want is the end-to-end delay: the latency from the first client to the first server, then from each server to the next one, and finally from the last server to the other client.

This can be seen in this great diagram from webrtchacks.
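Here is a rough sketch, assuming a browser client. The first-hop figure usually comes from the selected ICE candidate pair in the report returned by `RTCPeerConnection.getStats()`. That pair's `currentRoundTripTime` field is expressed in seconds. The `StatsEntry` and `StatsReportLike` types below are local stand-ins so the snippet compiles without DOM typings. `firstHopRttMs` and `endToEndMs` are hypothetical helper names. The per-hop values passed to `endToEndMs` are assumed to come from elsewhere, such as measurements taken on each server, because WebRTC does not report them to the client.

```typescript
// Local stand-in for one entry of an RTCStatsReport (only the fields we read).
interface StatsEntry {
  id: string;
  type: string;
  [key: string]: unknown;
}

// Anything Map-like: a real RTCStatsReport or a plain Map in tests.
interface StatsReportLike {
  get(id: string): StatsEntry | undefined;
  values(): Iterable<StatsEntry>;
}

// Hypothetical helper: first-hop RTT in milliseconds, from the selected candidate pair.
function firstHopRttMs(report: StatsReportLike): number | undefined {
  let pair: StatsEntry | undefined;
  // Preferred path: the transport stats point at the selected candidate pair.
  for (const s of report.values()) {
    const pairId = s.selectedCandidatePairId;
    if (s.type === "transport" && typeof pairId === "string") {
      pair = report.get(pairId);
      break;
    }
  }
  // Fallback: a nominated candidate pair whose checks succeeded.
  if (!pair) {
    for (const s of report.values()) {
      if (s.type === "candidate-pair" && s.nominated === true && s.state === "succeeded") {
        pair = s;
        break;
      }
    }
  }
  const rtt = pair?.currentRoundTripTime; // seconds, per the webrtc-stats spec
  return typeof rtt === "number" ? rtt * 1000 : undefined;
}

// Hypothetical helper: end-to-end one-way delay as the sum of per-hop one-way delays (ms).
function endToEndMs(hopsOneWayMs: number[]): number {
  return hopsOneWayMs.reduce((acc, d) => acc + d, 0);
}
```

In a page this could be called as `firstHopRttMs(await pc.getStats())`, where `pc` is an existing `RTCPeerConnection`. Summing one-way hop delays is the simplest model. It ignores any processing time inside each server.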

Measuring the end-to-end latency is much harder, because WebRTC stacks do not provide it automatically. It requires either sending additional ping-pong messages (e.g. using datachannels) or a mechanism relying on synchronized clocks, which is not very practical.
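Here is a minimal sketch of the ping-pong approach. It assumes a data channel whose peer runs the same code and echoes every ping back immediately. `PingPongProbe` and its JSON message format are hypothetical. `MessageSender` stands in for an `RTCDataChannel`, of which only `send` is needed. Halving the measured round trip gives the usual one-way estimate, which assumes both directions are roughly symmetric. Data channel messages may also see different queuing from media packets, so treat the result as an approximation.

```typescript
// Only the part of RTCDataChannel this sketch needs.
interface MessageSender {
  send(data: string): void;
}

// Hypothetical probe: both peers create one and route every incoming message to handleMessage.
class PingPongProbe {
  private nextId = 0;
  private readonly pending = new Map<number, number>(); // ping id -> send time (ms)
  readonly rttSamplesMs: number[] = [];
  private readonly channel: MessageSender;
  private readonly now: () => number;

  constructor(channel: MessageSender, now: () => number = Date.now) {
    this.channel = channel;
    this.now = now; // injectable clock; performance.now() is a finer-grained choice in browsers
  }

  ping(): void {
    const id = this.nextId++;
    this.pending.set(id, this.now());
    this.channel.send(JSON.stringify({ kind: "ping", id }));
  }

  handleMessage(data: string): void {
    const msg = JSON.parse(data) as { kind: string; id: number };
    if (msg.kind === "ping") {
      // Echo straight back so the sender measures a full round trip.
      this.channel.send(JSON.stringify({ kind: "pong", id: msg.id }));
    } else if (msg.kind === "pong") {
      const sentAt = this.pending.get(msg.id);
      if (sentAt !== undefined) {
        this.pending.delete(msg.id);
        this.rttSamplesMs.push(this.now() - sentAt);
      }
    }
  }

  // One-way delay estimated as RTT / 2 (assumes symmetric paths).
  latestOneWayMs(): number | undefined {
    const n = this.rttSamplesMs.length;
    return n === 0 ? undefined : this.rttSamplesMs[n - 1] / 2;
  }
}
```

In a browser it could be wired as `const probe = new PingPongProbe(dc, () => performance.now())` together with `dc.onmessage = (e) => probe.handleMessage(e.data)` on both peers, calling `probe.ping()` on a timer. The injectable clock keeps the class testable. `Date.now` is only the default.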

Real Users latency vs Synthetic Traffic latency

Some people use synthetic tests to measure the latency of their platform. That's great for alerting purposes, but it doesn't give you the full picture of how users are experiencing your service. For example, there may be one specific region where users are experiencing high latencies, but none of your test clients are probing your platform from that region.
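To illustrate the regional point, here is a small sketch that groups real-user RTT samples by region and reports a nearest-rank 95th percentile for each region. The `LatencySample` shape and the region labels are assumptions. How a sample gets tagged with a region depends on your own infrastructure. A region with a high p95 stands out even when the global average looks healthy.

```typescript
// Hypothetical shape of one real-user measurement.
interface LatencySample {
  region: string;
  rttMs: number;
}

// Nearest-rank percentile on an already sorted, non-empty array.
function percentile(sorted: number[], p: number): number {
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

// Group samples by region and compute the p95 RTT of each group.
function p95ByRegion(samples: LatencySample[]): Map<string, number> {
  const byRegion = new Map<string, number[]>();
  for (const s of samples) {
    const list = byRegion.get(s.region) ?? [];
    list.push(s.rttMs);
    byRegion.set(s.region, list);
  }
  const result = new Map<string, number>();
  for (const [region, values] of byRegion) {
    values.sort((x, y) => x - y);
    result.set(region, percentile(values, 95));
  }
  return result;
}
```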

When measuring latency for real users, you typically have to use the WebRTC getStats API or some datachannel ping-pong messaging. With synthetic traffic you have more freedom, for example to use special custom RTP packets or a video source with a timestamp watermark.
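As an illustration of the watermark idea, the sketch below encodes a millisecond timestamp as 48 black or white blocks in the top rows of a grayscale (luma) frame. It decodes the timestamp by averaging each block and thresholding at mid-gray, which tolerates mild codec noise. The block layout, `writeTimestamp` and `readTimestamp` are hypothetical and do not follow any existing tool's format. How raw frames are captured and read back is outside this sketch. Subtracting the decoded value from the receiver's clock only gives a meaningful latency when sender and receiver share a clock, for example when both synthetic clients run on the same machine.

```typescript
const BITS = 48; // enough for Date.now()-style millisecond timestamps

function checkWidth(width: number, block: number): void {
  if (width < BITS * block) throw new RangeError("frame too narrow for watermark");
}

// Draw bit i of tsMs as a block x block square (255 = 1, 0 = 0) in the top-left strip.
function writeTimestamp(luma: Uint8Array, width: number, block: number, tsMs: number): void {
  checkWidth(width, block);
  for (let bit = 0; bit < BITS; bit++) {
    const value = Math.floor(tsMs / 2 ** bit) % 2 === 1 ? 255 : 0;
    for (let y = 0; y < block; y++) {
      for (let x = bit * block; x < (bit + 1) * block; x++) {
        luma[y * width + x] = value;
      }
    }
  }
}

// Recover the timestamp by averaging each block and comparing against mid-gray.
function readTimestamp(luma: Uint8Array, width: number, block: number): number {
  checkWidth(width, block);
  let ts = 0;
  for (let bit = 0; bit < BITS; bit++) {
    let sum = 0;
    for (let y = 0; y < block; y++) {
      for (let x = bit * block; x < (bit + 1) * block; x++) {
        sum += luma[y * width + x];
      }
    }
    if (sum / (block * block) >= 128) ts += 2 ** bit;
  }
  return ts;
}
```

On the receiving side, the latency estimate would then be the local clock at render time minus `readTimestamp(...)` of the rendered frame.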

Network latency or Capture-to-Render latency

When measuring latency, you typically measure the delay of the packets in the network, but that's not the whole latency a user will experience for their voice and video. Apart from network latency, the software doing the capture and rendering also introduces delays. This is especially relevant for the buffers used on the receiver side to mitigate network issues like jitter, packet loss or packet reordering.

To measure the whole latency, including the delays introduced on the capturing and rendering sides, you can add some of the metrics exposed by the WebRTC getStats API to your calculations, such as jitterBufferDelay, totalPlayoutDelay and totalPacketSendDelay, among others.
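Here is a rough sketch of how these counters can be combined. It assumes the field names from the W3C webrtc-stats specification:
- `totalPacketSendDelay`/`packetsSent` on the sender's outbound-rtp stats
- `jitterBufferDelay`/`jitterBufferEmittedCount` on the receiver's inbound-rtp stats
- `totalPlayoutDelay`/`totalSamplesCount` on the audio media-playout stats

All of them are cumulative totals in seconds, so each one is divided by its count to get an average. `estimateCaptureToRenderMs` is a hypothetical helper. The network one-way delay is passed in (for example RTT/2). The result still leaves out capture device, encoding and decoding time, so it is only a partial estimate.

```typescript
// Local stand-ins for the cumulative counters read from getStats().
interface OutboundRtpLike { totalPacketSendDelay: number; packetsSent: number; }            // s, count
interface InboundRtpLike { jitterBufferDelay: number; jitterBufferEmittedCount: number; }   // s, count
interface AudioPlayoutLike { totalPlayoutDelay: number; totalSamplesCount: number; }        // s, count

// Average of a cumulative seconds counter, converted to milliseconds.
function avgMs(totalSeconds: number, count: number): number {
  return count > 0 ? (totalSeconds / count) * 1000 : 0;
}

// Hypothetical helper: sender pacing + network + jitter buffer + (optional) audio playout.
function estimateCaptureToRenderMs(
  sender: OutboundRtpLike,
  networkOneWayMs: number,
  receiver: InboundRtpLike,
  playout?: AudioPlayoutLike,
): number {
  let total = avgMs(sender.totalPacketSendDelay, sender.packetsSent);
  total += networkOneWayMs;
  total += avgMs(receiver.jitterBufferDelay, receiver.jitterBufferEmittedCount);
  if (playout) total += avgMs(playout.totalPlayoutDelay, playout.totalSamplesCount);
  return total;
}
```

Because the counters accumulate from the start of the session, you can pass the difference between two consecutive getStats() snapshots instead. That gives the average over that interval rather than over the whole call.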

Round Trip Time or One Way delay

Another small difference is whether the latency is measured as round trip time (the sum of both directions, A->B + B->A) or as one direction independently. In most cases, what is measured is the round trip time. It is then divided by two as a good estimate of the one-way delay, assuming both directions are roughly symmetric.

Conclusions

As a summary, next time you provide numbers on the latency of your WebRTC solution, please explain what type of latency you are measuring and how you are measuring it, to make it clearer 🙏.

If you can, you should probably try to measure the end-to-end delay, but even hop-by-hop latency is very relevant for monitoring and troubleshooting. If you have enough traffic, real-user latency is probably what you want to measure. If you don't have enough users, or you want more data, using synthetic tests to estimate latency is also a good approach.
