Loss based bandwidth estimation in WebRTC

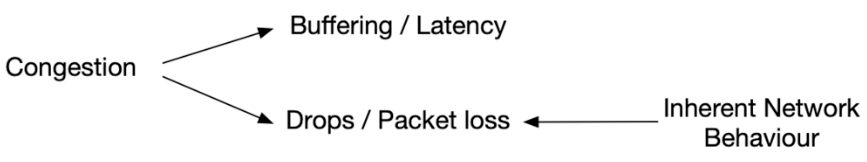

Measuring available bandwidth and avoiding congestion is the most critical and complex part of the video pipeline in WebRTC. The concept of bandwidth estimation (BWE) is simple: monitor packet latency, and if latency increases or packet loss occurs, back off and send less data. The first part is known as delay-based estimation, while the second part, less known, is referred to as loss-based estimation.

In the original implementation of WebRTC, the logic for loss-based estimation was straightforward: if there was more than 2% packet loss don't increase the bitrate sent and if it is more than 10% reduce the bitrate being sent. However, this naive approach had a flaw. Some networks also experience packet loss not due to congestion but inherent to the network itself (e.g., certain WiFi networks). We call that packet loss static or inherent packet loss.

- Basic loss based bwe (~2011): Based on static thresholds for packet loss to increase / decrease bwe. Source Code

- Loss based bwe v1 (~2019): Dynamic thresholds for packet loss and smoothing of measurements. Source Code

- Loss based bwe v2 (2023): Maximum likelihood modeling from candidates based on current packet loss and trends. Source Code, Tracker Ticket

This post will focus on describing that newest solution (loss based bwe v2) enabled this year in Chrome and all the browsers and clients based on the same library.

Loss based BWE v2

At a very high level this diagram shows how loss based bwe is integrated in the estimation logic and what are the inputs taken into account. The loss based estimation takes as input the estimation from delay based estimation and based on that an the packet loss feedback received from the packets being sent it will try to provide a final estimation.

The new loss based BWE v2 runs the following steps every time it needs to provide a new estimation:

1/ First, it generates a list of potential candidates that describe the network's (also known as channel's) characteristics. In this case, the characteristics considered are the available bandwidth and the inherent packet loss of the network.

1.a. Bandwidth candidates are sourced from different areas. Essentially, delay-based estimations, the most recent loss-based estimations, and the bitrate being sent are all considered. These are then multiplied by certain factors (0.95, 1, 1.02) to generate potential additional new bandwidth candidates.

1.b. The inherent packet loss candidate is derived from the latest estimation. It is updated based on the trend of recent observations, using Newton's update method which is based on first and second derivatives. Additionally, there are limits based on the sent bitrate - at higher bitrates, the inherent packet loss should not be too high.

2/ Second, the algorithm selects the candidate that can most accurately explain the packet loss in the most recent observations. For that it calculates an objective score for each candidate and this is the maximum likelihood modeling aspect of the algorithm.

Those two steps can be seen in this snippet of the real code that summarize the concept of the algorithm:

ChannelParameters best_candidate = current_best_estimate_;

double objective_max = std::numeric_limits<double>::lowest();

for (ChannelParameters candidate : GetCandidates(in_alr)) {

NewtonsMethodUpdate(candidate);

const double candidate_objective = GetObjective(candidate);

if (candidate_objective > objective_max) {

objective_max = candidate_objective;

best_candidate = candidate;

}

}

The bandwidth of the chosen candidate with the highest objective score in this last step is then reported as the final estimation that will be used to decide how much video to encode and send to the network.

Although the algorithm appears simple based on this description, the coding process is far from trivial. Despite its relatively small size, it features over 30 tuneable parameters. If you're skeptical, you can review the issue tracker history. You'll see it took more than two years to develop, optimise, and deploy this new feature to production.

What do you think? As usual feedback is more than welcomed either here or in Twitter.

Comments

Post a Comment